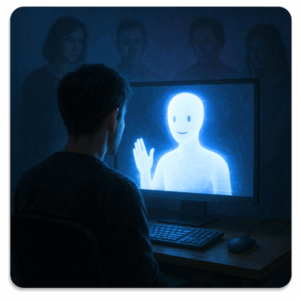

While AI tools like ChatGPT offer 24/7 accessibility, they lack the nuanced understanding and therapeutic relationship that licensed therapists provide. More and more people are using large language models like ChatGPT as a stand-in for real therapy or meaningful connection. And while AI can offer information, or even momentary comfort, it also has a shadow side: reinforcing your biases, confirming distorted thinking with too much positive reflection, and leaving you lonelier than before.

It’s not that AI is inherently bad. It’s that it was never designed to replace the irreplaceable: human relationship, accountability, and the deep attunement that comes from being witnessed by someone who can see what you can’t. Understanding these AI therapy limitations is crucial for anyone considering ChatGPT therapy as a mental health solution.

The False Sense of Connection in AI Therapy

One of the most seductive qualities of AI is that it “feels” like you’re having a conversation with something that knows you. It’s programmed to mirror your tone and offer validation.

But unlike a therapist, a trusted friend, or a community, AI can’t perceive your nonverbal cues, notice your subtle contradictions, or check in about whether its reflection resonates. It can’t ask, “Are you sure?” or gently challenge you when you’re about to repeat the same patterns that keep you stuck.

Validation without reality-testing isn’t therapy; it’s an echo chamber. Research from Stanford University demonstrates that AI chatbots often provide generic responses to complex emotional situations, missing critical nuances that human therapists would catch.

Over time, the experience of being “heard” without being known can deepen the ache of loneliness rather than soothe it. This is particularly concerning given the rise in mental health stigma that already prevents people from seeking professional help.

Loneliness and Emotional Avoidance

For many people, turning to ChatGPT or other AI tools feels safer than the vulnerability of human connection. If you grew up believing your feelings were too much, or not enough, you might prefer something that always responds predictably and never has needs of its own.

AI can ease discomfort in the moment, but it doesn’t meet deeper longings for belonging and real connection. Using it repeatedly can become a way to avoid the risk, and reward, of genuine relationships.

A comprehensive study in Nature reported that people who relied heavily on AI for emotional support showed less motivation to seek human connection. Over time, their social anxiety increased. Easy access to AI interactions can unintentionally reinforce isolation.

Why ChatGPT Therapy Lacks Challenge and Reinforces Bias

AI is built to be agreeable. Its primary goal is to be helpful and inoffensive. This means that it often repeats back what you want to hear or what aligns with dominant cultural narratives, rather than offering nuance or challenge.

If you’re stuck in black-and-white thinking, shame spirals, or grandiose beliefs, AI is unlikely to question your assumptions. It doesn’t have a felt sense of you, so it can’t say, “I’m noticing this comes up a lot. What do you think it means?”

And that’s where therapy shines: it gives you someone who cares enough to help you see the patterns you can’t see alone. Licensed therapists are trained to recognize cognitive distortions, challenge unhelpful thought patterns, and provide evidence-based interventions that AI simply cannot replicate.

How ChatGPT Therapy Impairs Ownership and Creativity

The concern goes beyond mental health. Even in creative work, over-reliance on AI can reduce your sense of ownership and engagement.

A recent study exploring how people use large language models found something striking: “Participants who first worked without AI and then used AI tools to revise (‘Brain-to-LLM’) showed higher neural connectivity across multiple brain networks, alpha, beta, theta, and delta bands. They were more engaged, more integrated. In contrast, participants who relied on AI from the start (‘LLM-to-Brain’) demonstrated reduced neural effort and impaired perceived ownership of their ideas.”

Put more simply: when you let AI do the heavy lifting, your brain does less of the meaningful work. This shows up in therapy, too. If you outsource your reflection to a machine, the insights don’t feel like they belong to you. And when something doesn’t feel like it’s yours, you’re less likely to trust it, and less likely to change.

The Future of AI and ChatGPT Therapy

AI isn’t going away. It can be a helpful companion when used with intention, a spark to get unstuck or a tool to organize your thoughts. The key is understanding how technology in therapy can support, rather than replace, human connection.

But if you find yourself using AI as a stand-in for real connection or the brave work of therapy, it’s worth asking: “What am I protecting myself from (or avoiding)? And what might be possible if I reached for a living, breathing human being instead?”

We heal in relationship. No algorithm can replicate the magic of being known by someone who is committed to your growth and well-being. Human vs AI therapy isn’t even a fair comparison; they serve fundamentally different purposes.

Frequently Asked Questions

Q: Can ChatGPT diagnose mental health conditions?

A: No, ChatGPT cannot diagnose mental health conditions. Only licensed mental health professionals can provide accurate diagnoses based on clinical training and assessment tools.

Q: Is it safe to share personal information with AI?

A: Use caution. Whatever a given AI tool does or doesn’t retain between sessions, tools like ChatGPT lack the confidentiality protections and ethical guidelines that govern licensed therapy relationships.

Q: When might AI be helpful for mental health?

A: AI can help with journaling prompts, basic coping strategies, psychoeducation, and supplementing professional therapy. However, it shouldn’t replace real therapeutic support. The best way to use AI is as a tool within a broader mental health care plan.

Q: What are the biggest limitations of AI/ChatGPT therapy?

A: AI cannot provide genuine empathy, recognize non-verbal cues, adapt interventions to individual needs, or form therapeutic relationships. It also lacks the ability to handle crisis situations or provide specialized treatment for complex mental health conditions.

Ready to Experience Real Connection?

If you’re ready to move beyond AI assistance and explore an authentic therapeutic relationship, finding the right therapist is your next step. Real therapy offers what AI cannot: genuine human connection, professional expertise, and personalized care tailored to your unique needs.

Understanding what to expect in therapy can help reduce anxiety about taking this important step. Many people find that the vulnerability required for therapy, the very thing that makes AI feel “safer”, is actually where the deepest healing happens.

Take Action Today:

- Browse qualified therapists in your area

- Learn about different therapy approaches and specialties

- Consider how a compassionate approach to mental health might transform your relationship with yourself

If you’re feeling lonely, disconnected, or unsure where to start, working with a therapist can be a powerful first step. You deserve support that honors your complexity, challenges your assumptions, and helps you build a life that feels more alive. Find a qualified therapist near you!

Reference

Kosmyna, N., Hauptmann, E., Yuan, Y. T., Situ, J., Liao, X.-H., Beresnitzky, A. V., Braunstein, I., & Maes, P. (2025). Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task [Preprint]. arXiv. https://doi.org/10.48550/arXiv.2506.08872

Original source: https://www.goodtherapy.org/blog/chatgpt-therapy-why-ai-cant-replace-real-therapists/. All rights remain with the original publisher.